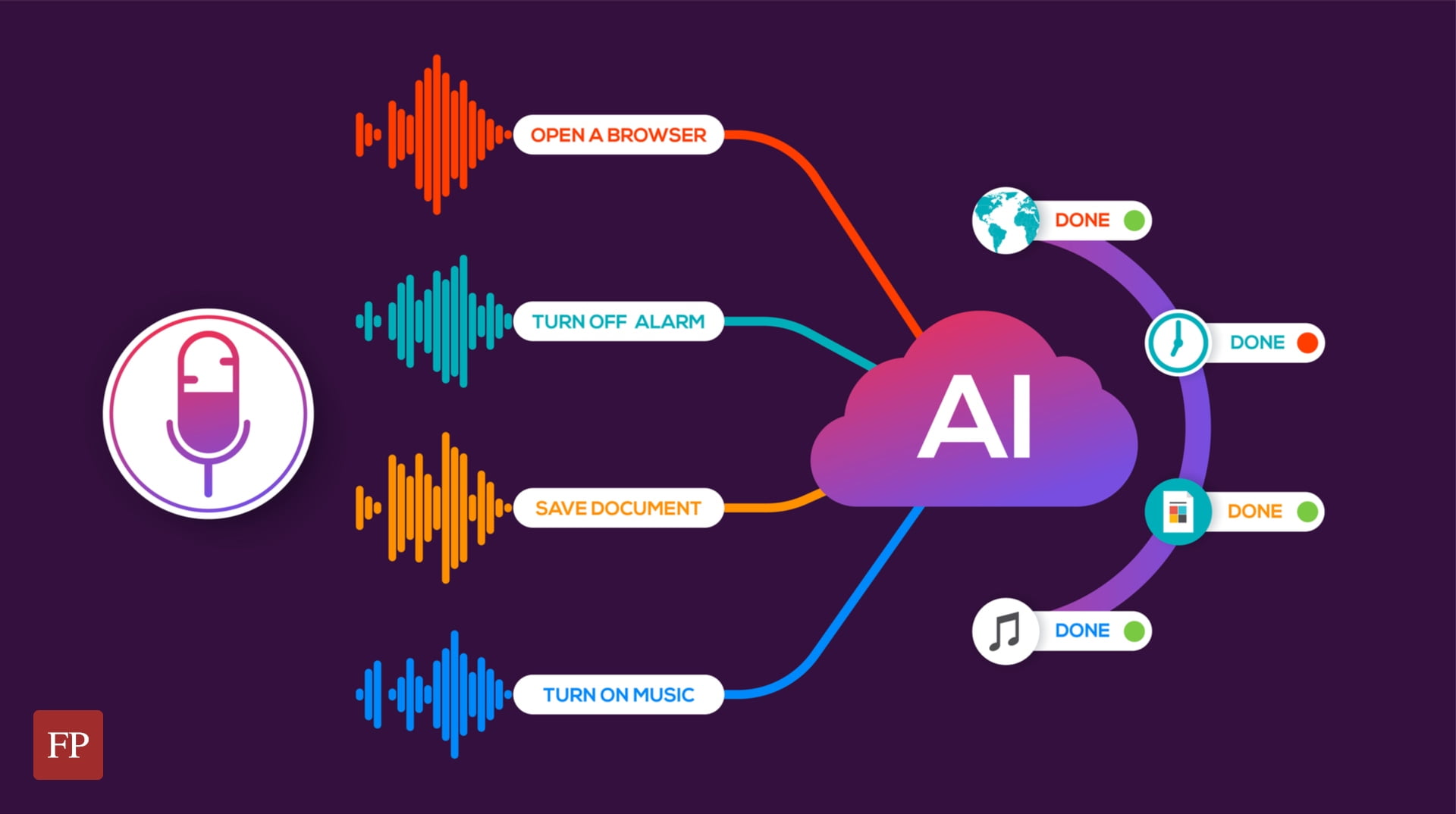

A speech-to-text (STT) system, sometimes called automatic speech recognition (ASR), is what its name implies: a way of transforming spoken words into text that can be used later for any purpose.

Speech recognition technology is extremely useful. It can be used for many applications, such as automating transcription, dictating books and other texts, and enabling complex analysis of the generated text files.

In the past, speech-to-text technology was dominated by proprietary software and libraries. Open source alternatives either didn’t exist or came with severe limitations and no community around them.

This is changing: today there are many open source speech-to-text tools and libraries that you can use right now.

What is a Speech Recognition Library/System?

It is the software engine responsible for converting speech to text.

It is not meant to be used by end users. Developers first have to adapt these libraries and use them to build programs that offer speech recognition to users.

Some of them ship with pre-trained models that recognize speech in a given language and generate the corresponding text, while others provide only the engine, and developers have to train the models themselves.

You can think of them as the underlying engines of speech recognition programs.

If you are an ordinary user looking for speech recognition, then none of these will be suitable for you, as they are meant for development use only.

What is an Open Source Speech Recognition Library?

The difference between proprietary and open source speech recognition is that, in the latter, the library used to process the audio is licensed under one of the recognized open source licenses, such as the GPL or MIT.

Microsoft and IBM, for example, have their own speech recognition toolkits that they offer to developers, but these are not open source, simply because they are not released under an open source license.

What are the Benefits of Using Open Source Speech Recognition?

Mainly, you get few or no restrictions on commercial usage in your application, as open source speech recognition libraries allow you to use them for whatever use case you need.

Also, most, if not all, open source speech recognition toolkits are free of charge, which can save you a lot of money compared to the proprietary ones.

The benefits of using open source speech recognition toolkits are indeed too many to be summarized in one article.

Top Open Source Speech Recognition Systems

In this article we’ll look at several of them, their pros and cons, and when each should be used.

1. Project DeepSpeech

This project is made by Mozilla, the organization behind the Firefox browser.

It’s a 100% free and open source speech-to-text library that uses machine learning, built on the TensorFlow framework. In other words, you can train your own models with it to improve the underlying speech-to-text results, or even to bring it to other languages if you want.

You can also easily integrate it into other machine learning projects that you have on TensorFlow. Sadly, it appears that the project currently supports only English by default. It also has bindings for several programming languages, such as Python (3.6).

However, after the recent Mozilla restructuring, the future of the project is unknown, as it may or may not be shut down depending on what Mozilla decides.

You may visit the Project DeepSpeech homepage to learn more.
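The Python bindings mentioned above can be used roughly as follows. This is a minimal sketch, assuming the `deepspeech` and `numpy` packages are installed and that you have downloaded a model file (and optionally a scorer file). The function names `read_pcm16_mono` and `transcribe`, and every file path you pass in, are placeholders invented for this illustration and are not part of DeepSpeech itself.

```python
import wave


def read_pcm16_mono(path, expected_rate=16000):
    """Return the raw 16-bit mono PCM bytes of a WAV file.

    Raises ValueError if the file is not mono, not 16-bit,
    or not sampled at the expected rate.
    """
    with wave.open(path, "rb") as wav:
        if wav.getnchannels() != 1:
            raise ValueError("expected mono audio")
        if wav.getsampwidth() != 2:
            raise ValueError("expected 16-bit samples")
        if wav.getframerate() != expected_rate:
            raise ValueError(f"expected {expected_rate} Hz audio")
        return wav.readframes(wav.getnframes())


def transcribe(wav_path, model_path, scorer_path=None):
    """Transcribe a WAV file with DeepSpeech and return the text."""
    # Imported lazily so the WAV helper above works without DeepSpeech.
    import numpy as np
    from deepspeech import Model

    model = Model(model_path)
    if scorer_path:
        # The external scorer (language model) is optional.
        model.enableExternalScorer(scorer_path)
    # The audio must match the sample rate the model was trained on.
    pcm = read_pcm16_mono(wav_path, expected_rate=model.sampleRate())
    return model.stt(np.frombuffer(pcm, dtype=np.int16))
```

Checking the audio format up front is a deliberate choice in this sketch: feeding audio with the wrong sample rate or channel count tends to produce poor transcripts rather than a clear error.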

2. Kaldi

Kaldi is open source speech recognition software written in C++ and released under the Apache License 2.0.

It works on Windows, macOS and Linux. Its development started back in 2009. Kaldi’s main advantages over some other speech recognition software are that it’s extensible and modular: the community provides many third-party modules that you can use for your tasks.

Kaldi also supports deep neural networks, and offers excellent documentation on its website. While the code is mainly written in C++, it’s “wrapped” by Bash and Python scripts.

So if you are looking just for the basic usage of converting speech to text, you’ll find it easy to accomplish via either Python or Bash. You may also wish to check Kaldi Active Grammar, a pre-built Python engine that comes with trained English models ready for use.

Learn more about Kaldi speech recognition from its official website.

3. Julius

Probably one of the oldest speech recognition programs ever: its development started in 1991 at the University of Kyoto, and then its ownership was transferred to an independent project in 2005. A lot of open source applications use it as their engine (think of KDE Simon).

Julius’s main features include real-time STT processing, low memory usage (less than 64MB for 20,000 words), N-best and word-graph output, the ability to work as a server unit, and a lot more.

This software was mainly built for academic and research purposes. It is written in C, and works on Linux, Windows, macOS and even Android (on smartphones). Currently it supports only English and Japanese.

The software is probably easy to install from your Linux distribution’s repository; just search for the julius package in your package manager.

You can access Julius source code from GitHub.

4. Flashlight ASR (Formerly Wav2Letter++)

If you are looking for something modern, then this one is worth considering.

Flashlight ASR is open source speech recognition software released by Facebook’s AI Research team. The code is written in C++ and released under the MIT license.

Facebook described its library as “the fastest state-of-the-art speech recognition system available” up to 2018.

The concepts on which this tool is built make it optimized for performance by default. Facebook’s machine learning library Flashlight is used as the underlying core of Flashlight ASR. The software requires that you first train a model for the language you want before you can run speech recognition.

No pre-built support for any language (including English) is available. It’s just a machine-learning-driven tool to convert speech to text.

You can learn more about it from the following link.

5. PaddleSpeech (Formerly DeepSpeech2)

Researchers at the Chinese giant Baidu are also working on their own speech recognition toolkit, called PaddleSpeech.

The speech toolkit is built on the PaddlePaddle deep learning framework, and provides many features such as:

- Speech-to-Text support.

- Text-to-Speech support.

- State-of-the-art performance in audio transcription; it even won the NAACL 2022 Best Demo Award.

- Support for many large language models (LLMs), mainly for English and Chinese languages.

You can train your own models with the engine for any language you desire.

PaddleSpeech’s source code is written in Python, so it should be easy for you to get familiar with it if that’s the language you use.

6. OpenSeq2Seq

Developed by NVIDIA for training sequence-to-sequence models.

While it can be used for way more than just speech recognition, it is nonetheless a good engine for this use case. You can either train your own models with it, or use the models shipped by default. It supports parallel processing on multiple GPUs or multiple CPUs, along with strong support for NVIDIA technologies like CUDA and NVIDIA’s graphics cards.

As of 2021 the project is archived; it can still be used, but it appears to be no longer under active development.

Check its speech recognition documentation page for more information, or you may visit its official source code page.

7. Vosk

One of the newest open source speech recognition systems, as its development started in 2020.

Unlike other systems in this list, Vosk is ready to use right after installation, as it supports 10 languages (English, German, French, Turkish…) with portable 50MB models already available (there are also larger models, up to 1.4GB, if you need them).

It also works on Raspberry Pi, iOS and Android devices, and provides a streaming API which lets you connect to it and run your speech recognition tasks online. Vosk has bindings for Java, Python, JavaScript, C# and NodeJS.
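To give an idea of what the streaming style looks like from the Python bindings, here is a minimal sketch. It assumes the `vosk` package is installed, that a model has been downloaded and unpacked into a local directory, and that the input is a 16-bit mono WAV file. The helper names `iter_chunks` and `transcribe_streaming` and the chunk size are choices made for this illustration, not part of Vosk.

```python
import json
import wave


def iter_chunks(wav, frames_per_chunk=4000):
    """Yield successive blocks of raw PCM bytes from an open wave reader."""
    while True:
        data = wav.readframes(frames_per_chunk)
        if not data:
            break
        yield data


def transcribe_streaming(wav_path, model_dir):
    """Feed a WAV file to Vosk chunk by chunk and return the full text."""
    # Imported lazily so iter_chunks works without Vosk installed.
    from vosk import KaldiRecognizer, Model

    model = Model(model_dir)
    pieces = []
    with wave.open(wav_path, "rb") as wav:
        rec = KaldiRecognizer(model, wav.getframerate())
        for chunk in iter_chunks(wav):
            # AcceptWaveform is truthy once an utterance has been finalized;
            # Result() then returns that utterance as a JSON string.
            if rec.AcceptWaveform(chunk):
                pieces.append(json.loads(rec.Result())["text"])
        # Flush whatever audio is still buffered at the end of the file.
        pieces.append(json.loads(rec.FinalResult())["text"])
    return " ".join(p for p in pieces if p)
```

The same loop should work for live audio: replace `iter_chunks` with any source that yields PCM blocks, such as a microphone callback or a network socket.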

Learn more about Vosk from its official website.

8. Athena

An end-to-end automatic speech recognition (ASR) engine.

Written in Python and licensed under the Apache 2.0 license. It supports unsupervised pre-training and multi-GPU training, either on a single machine or across multiple machines. It is built on top of TensorFlow.

It has a large model available for both English and Chinese.

Visit Athena source code.

9. ESPnet

Written in Python on top of PyTorch.

It also supports end-to-end ASR. It follows the Kaldi style for data processing, so it is easier to migrate from Kaldi to ESPnet. The main selling point of ESPnet is the state-of-the-art performance it achieves in many benchmarks, and its support for other speech and language processing tasks such as text-to-speech (TTS), machine translation (MT) and speech translation (ST).

Licensed under the Apache 2.0 license.

You can access ESPnet from the following link.

10. Whisper

One of the newest speech recognition toolkits in the family, developed by OpenAI (the same company behind ChatGPT).

The main selling point of Whisper is that it does not specialize in a set of training datasets for specific languages only; instead, it can be used with any suitable model and for any language. It was trained on 680 thousand hours of audio, one third of which was non-English.

It supports speech-to-text and speech translation into English. The company claims that it makes 50% fewer errors than other toolkits on the market.
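Claims about error counts like the one above are usually expressed as word error rate (WER): the number of word substitutions, insertions and deletions needed to turn the system’s output into the reference transcript, divided by the number of words in the reference. The article does not say which metric is behind the 50% figure, so treating it as WER is an assumption. The function below is a minimal sketch of that metric, and its name `word_error_rate` is chosen for this illustration only.

```python
def word_error_rate(reference, hypothesis):
    """Return the word error rate of `hypothesis` against `reference`.

    Both arguments are plain strings; words are split on whitespace.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)
```

Under this measure, a toolkit that scores 5% WER on a test set makes 50% fewer errors than one that scores 10% on the same set. Comparisons are only meaningful when both systems are evaluated on the same audio with the same text normalization.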

Learn more about Whisper from its official website.

11. StyleTTS2

The newest library on the list, released in the middle of November 2023. Note that it is a text-to-speech (TTS) model, which synthesizes speech from text rather than recognizing it. It employs diffusion techniques and training with large speech language models (SLMs) in order to achieve more advanced results than other models.

The makers of the model published it along with a research paper, where they make the following claim about their work:

“This work achieves the first human-level TTS synthesis on both single and multispeaker datasets, showcasing the potential of style diffusion and adversarial training with large SLMs.”

It is written in Python, and has some Jupyter notebooks shipped with it to demonstrate how to use it. The model is licensed under the MIT license.

There is an online demo where you can see different benchmarks of the model: https://styletts2.github.io/

What is the Best Open Source Speech Recognition System?

If you are building a small application that you want to be portable everywhere, then Vosk is your best option, as it has Python bindings, works on iOS, Android and Raspberry Pi too, and supports up to 10 languages. It also provides larger models if you need them, and smaller ones for portable applications.

If, however, you want to train and build your own models for much more complex tasks, then any of PaddleSpeech, Whisper and Athena should be more than enough for your needs, as they are the most modern state-of-the-art toolkits.

As for Mozilla’s DeepSpeech, it lags behind its competitors in this list in features, and isn’t cited as often in academic speech recognition research as the others. Its future is also uncertain after the recent Mozilla restructuring, so you may want to stay away from it for now.

Julius and Kaldi have also traditionally been widely cited in the academic literature.

Alternatively, you may simply try several of these libraries to see how they work for your use case.

Conclusion

The speech recognition field is becoming mainly driven by open source technologies, a situation that seemed very far-fetched a few years ago.

Current open source speech recognition software is modern and bleeding-edge, and you can use it for almost any purpose instead of depending on Microsoft’s or IBM’s toolkits.

If you have any other recommendations for this list, or comments in general, we’d love to hear them below!

Hanny is a computer science & engineering graduate with a master’s degree, and an open source software developer. He has created a lot of open source programs over the years, and maintains separate online platforms for promoting open source in his local communities.

Hanny is the founder of FOSS Post.